Adaptive service assurance in 5G networks

With the adoption of 5G, data traffic will increase by 700% in the next 4 years (GSMA, 2020). Tier 1 U.S. operators tell EXFO this growth outpaces the capabilities of their current service assurance systems. They predict that they will need to monitor by sampling, since they won’t have today’s complete visibility into control plane and user plane traffic. Operators are drowning in big data while losing access to insight at a time when they need to simplify operations and improve business outcomes.

By their own estimates, service providers expect to lose sight of 80% of what’s happening in their networks, at a time when virtualization and software-defined networking kick in to create dynamic, orchestrated networks that will change faster than humans can react. The day has come when the user experience can break down and we don’t even know it.

Towards the end of 2019, service providers started outlining their vision for service assurance. Our customers began to specify what they will need to remain in control of tomorrow’s complex networks and services.

Their requirements are nothing short of transformative. To summarize their call to action, service assurance of the (near) future will rely on:

- Extensive use of data collected by network functions—traces, events, logs

- Telemetry feeds from virtualized infrastructure, functions and NFVI (e.g. OpenTracing)

- Real-time data streaming to allow for closed-loop automation—scalable to 30 billion messages per hour processed within 100 ms

- Open plug-and-play functionality that lets best-of-breed solutions coexist, so service providers avoid vendor lock-in

- Workflow management and open automation support based on ONAP

- Ability to immediately resolve an individual user experience, whether it be a customer or IoT device

- Ability to do all of this adaptively—the right data, only as needed—to control compute, energy and network consumption
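To put the streaming target in the list above into perspective, a back-of-the-envelope calculation helps. The sketch below converts 30 billion messages per hour into an average per-second rate and then uses Little's law to estimate how many messages are in the pipeline at once. It assumes messages arrive evenly and each spends the full 100 ms in the pipeline; that assumption and the function names are ours for illustration, not part of any operator specification.

```python
# Back-of-the-envelope sizing for the streaming target quoted above.
# Assumption (ours): messages arrive evenly and each one spends the
# full 100 ms latency budget inside the pipeline.

MESSAGES_PER_HOUR = 30_000_000_000   # from the requirement list
LATENCY_BUDGET_S = 0.100             # 100 ms processing window


def messages_per_second(per_hour: int) -> float:
    """Average arrival rate implied by an hourly volume."""
    return per_hour / 3600


def in_flight(rate_per_s: float, latency_s: float) -> float:
    """Little's law: items in the system = arrival rate x time in system."""
    return rate_per_s * latency_s


rate = messages_per_second(MESSAGES_PER_HOUR)
print(f"average rate: {rate:,.0f} messages/s")
print(f"in flight at 100 ms: {in_flight(rate, LATENCY_BUDGET_S):,.0f} messages")
```

That works out to roughly 8.3 million messages per second on average, with on the order of 830,000 messages in flight at any moment, before allowing for traffic bursts.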

This set of requirements is quite a departure from yesterday’s service assurance, where measuring everything all the time was considered an essential requirement and missing anything was considered a system flaw.

What’s underpinning this change of direction? Why are current systems reaching their limits?

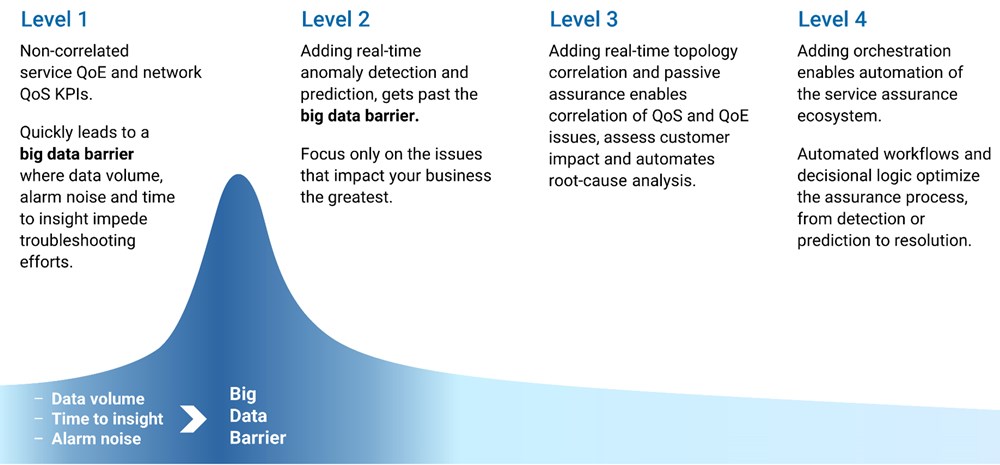

Big data is a big part of the problem. Service assurance systems based on big data are becoming too slow to respond with critical insight. As data lakes fill up, it takes longer to find the “small data” that matters, just when real-time information about subscriber experience and network performance is needed for orchestration and closed-loop automation.

Thankfully, the big data barrier can be overcome with real-time streaming analytics and machine-learning-based anomaly detection methods, fast time-series AI-driven diagnostics, and predictive analytics. Here’s a quick summary of what it takes to get from today’s static data to an adaptive, automation-centric operations environment.
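As one illustration of what anomaly detection on a live stream can look like, the sketch below keeps an exponentially weighted running mean and variance of a KPI (here, latency in milliseconds) and flags samples that sit far outside the recent baseline. This is a deliberately simple statistical stand-in, not a machine-learning model and not a description of any EXFO product; the class name, parameters and sample values are all hypothetical.

```python
import math


class EwmaAnomalyDetector:
    """Flags samples that deviate strongly from an exponentially
    weighted running mean. State is constant-size: no history is stored."""

    def __init__(self, alpha: float = 0.1, threshold: float = 3.0, warmup: int = 10):
        self.alpha = alpha          # weight given to the newest sample
        self.threshold = threshold  # z-score above which a sample is anomalous
        self.warmup = warmup        # samples to observe before flagging anything
        self.mean = 0.0
        self.var = 0.0
        self.count = 0

    def update(self, value: float) -> bool:
        """Consume one sample; return True if it looks anomalous."""
        self.count += 1
        if self.count == 1:
            self.mean = value       # first sample seeds the baseline
            return False
        deviation = value - self.mean
        std = math.sqrt(self.var)
        is_anomaly = (
            self.count > self.warmup
            and std > 0
            and abs(deviation) / std > self.threshold
        )
        # Fold only normal samples into the baseline, so that a fault
        # does not quickly become the "new normal".
        if not is_anomaly:
            self.mean += self.alpha * deviation
            self.var = (1 - self.alpha) * (self.var + self.alpha * deviation ** 2)
        return is_anomaly


# Hypothetical latency samples in ms, with one obvious spike.
latencies = [20, 21, 19, 20, 22, 21, 20, 19, 21, 20, 20, 21, 95, 20]
detector = EwmaAnomalyDetector()
flagged = [i for i, v in enumerate(latencies) if detector.update(v)]
print(flagged)
```

With these sample values, only the 95 ms spike (index 12) is flagged. Because the detector holds three numbers per KPI rather than a history, the same idea can run against a stream without first landing the data in a lake.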

The journey to adaptive service assurance

Given that we now know the challenge, service assurance has to adapt.

Literally.

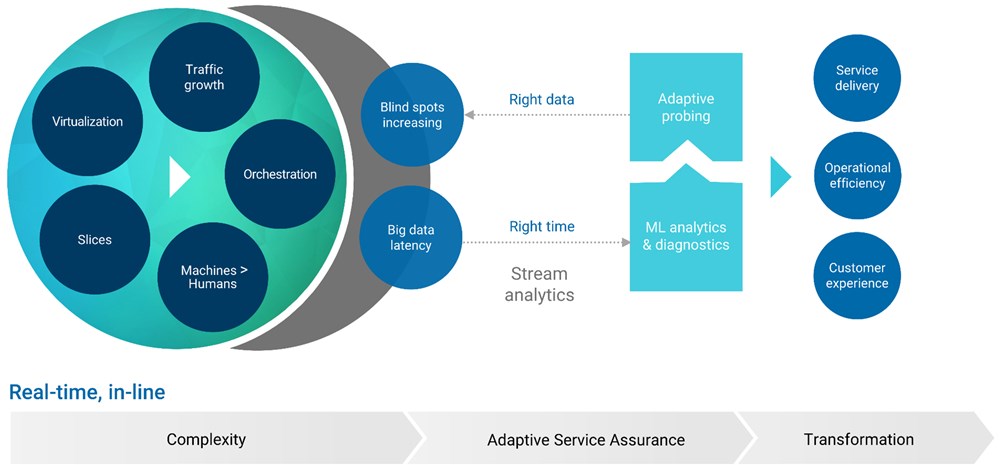

At EXFO we call our new approach adaptive service assurance. This means measuring the right data, at the right time, in the context of what needs to be diagnosed, optimized, or analyzed.

It goes beyond our current comprehensive monitoring capabilities to integrate and analyze data from third-party systems and existing big-data repositories (e.g., DCAE / NWDAF), and to consider new types of non-network data such as weather, traffic patterns, and social media sentiment.

To manage this, service assurance has to embrace the new cloud-native, API-driven orchestrated architecture that will power 5G standalone networks, while still being able to support the 3G, 4G, and 5G NSA networks that will continue to coexist for the foreseeable future.

Backward compatibility is key, but our blueprint for adaptive service assurance has to align carefully with 5G SA and share the same attributes:

- 5G SA employs a new, fully virtualized cloud-native core. Its network functions will be orchestrated in real time and distributed across centralized and edge computing sites.

- 5G SA will deliver applications over network slices that can be controlled by third parties using APIs. It will be a programmable mobile cloud: a computing and networking platform-as-a-service with ultra-reliable low-latency communications (URLLC) capabilities.

- 5G SA will scale to manage millions of simultaneously connected devices and will monitor their location to within centimeters.

- 5G SA will require latency analytics for each application and network segment, and service assurance analytics for each class of device.

This is the context operations teams will face. And that’s why engineering teams are calling for service assurance designed for this dynamic world. To form a comprehensive view of network and cloud infrastructure, applications, functions, slices and “users,” engineering teams will need a mix of active and passive monitoring probes and agents, plus telemetry data about network events.

To overcome the need to collect and analyze all this big data, all the time, service assurance must be responsive and adaptive. It will need to intelligently direct probes and agents to scale, measure, collect and analyze the right data at the right time to support and assure diverse 5G SA use cases.
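The idea of directing probes to collect the right data at the right time can be sketched as a small control loop: sample lightly while a slice or segment looks healthy, switch to full capture when something looks wrong, then back off gradually. The example below is a minimal illustration under assumed values; the class name, the 5% baseline and the halving back-off are hypothetical choices, not a description of how any particular probe or orchestrator behaves.

```python
from dataclasses import dataclass, field


@dataclass
class AdaptiveSampler:
    """Chooses what fraction of traffic a probe should capture."""

    baseline: float = 0.05   # hypothetical steady-state sampling fraction
    maximum: float = 1.0     # full capture while diagnosing
    backoff: float = 0.5     # multiplier applied after each healthy interval
    rate: float = field(init=False)

    def __post_init__(self) -> None:
        # Start at the light-touch baseline.
        self.rate = self.baseline

    def next_rate(self, anomaly_detected: bool) -> float:
        """Update and return the sampling fraction for the next interval."""
        if anomaly_detected:
            # Something looks wrong: capture everything for this target.
            self.rate = self.maximum
        else:
            # Healthy interval: halve the rate, but never drop below baseline.
            self.rate = max(self.baseline, self.rate * self.backoff)
        return self.rate


# One flag per monitoring interval; True marks a detected anomaly.
health = [False, False, True, False, False, False, False, False]
sampler = AdaptiveSampler()
rates = [sampler.next_rate(flag) for flag in health]
print(rates)
```

In this run the sampler sits at 5%, jumps to full capture on the anomaly, and steps back down through 50%, 25%, 12.5% and 6.25% before settling at the baseline again. In practice the anomaly flag would come from streaming analytics, and the back-off schedule would be tuned to trade diagnostic detail against compute, energy and network cost.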

Adaptive service assurance overcomes the challenges ahead

The latest real-time data frameworks, AI analytics and automation will all be required to achieve this. Big iron and monolithic VNFs that were so modern just a few years ago need to fit into containers and scale cloud-natively. The results will amaze anyone who uses several systems each day to keep networks going.

Adaptive service assurance will overcome big data overhead by employing the most efficient combination of monitoring, testing, real-time streaming analytics and AI to provide the right information from live data when it’s needed, instead of storing swamps of data that rarely get used. In other words, when you face too much data, it turns out less is more.